Maximizing GPU Utilization to Near 100%

Revolutionary Intelligence! Your Supercomputing '24 Recap

At Supercomputing 2024 (SC24) in Atlanta in November, our NR1-S® AI Inference Appliance demonstrated software readiness, ease of use, and a 50-90% price/performance advantage over AI Inference servers built on AI-host CPU architecture. NeuReality showed how it is shaping the future of AI Inference by thinking radically differently at the deep systems and architecture level.

But the real news was the never-before-seen Large Language Model demonstrations of Llama 3 and Mistral running on NR1 server-on-chips, super boosting complementary AI Accelerators to near 100% utilization! Unlike other solutions, NR1 replaces today's AI-host CPUs to unleash the full capability of GPUs, and in fact of any XPU.

Revolutionizing AI Inference

The NR1-S AI Inference Appliance impressed SC24 attendees (customers, partners, analysts, and press) with its ability to drive GPU utilization to near 100%, outperforming traditional AI-host CPU and NIC architectures that limit today's GPUs to less than 50% utilization in AI inferencing.

NeuReality doesn't compete with AI Accelerators - we make them better.

Our accelerator-agnostic, purpose-built NR1 architecture, powered by cutting-edge 7nm NR1 server-on-chips, cuts silicon waste and energy use.

This means we can offer a whopping 50-90% gain in price/performance and give you the best bang for your buck per AI token.

Plus, it opens up exciting new revenue streams for cloud service providers, governments, and businesses of all sizes and industries.

Customer-Friendly and 3x Faster Time-to-Market

NeuReality makes rolling out your Generative AI projects a breeze with ready-to-go software and APIs, helping any business or government ramp up AI solutions 3x faster and more cost-effectively than ever before.

As pictured below, we demonstrated an enterprise-ready Conversational AI LLM that handles the full end-to-end workflow of a customer call center with Llama 3-ready software. It is so easy to install and use that it reduces the need for complex software porting and long IT labor hours.

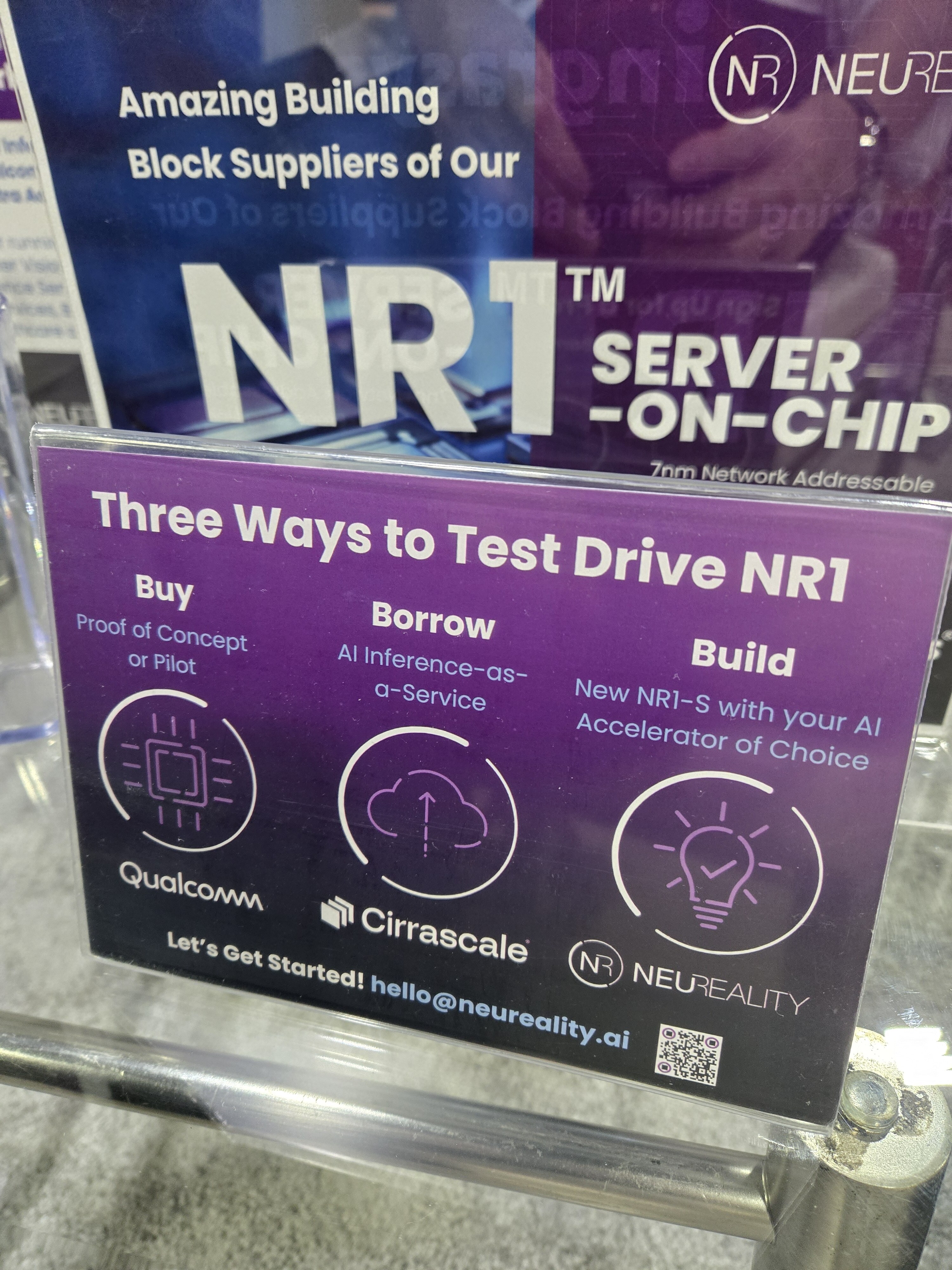

Our NR1-S Appliance, housing Qualcomm® Cloud AI 100 Ultra accelerators, is now deployed with Cirrascale Cloud Services and, separately, in on-premises AI servers at a major Fortune 500 financial services customer.

It’s a once-in-a-generation architecture shift, in which NR1 removes the traditional AI-host CPU bottlenecks that make AI Inferencing so costly and energy-inefficient. Plus, the chip handles AI pre- and post-processing with ready-made compute graphs for each AI query type.

Watch Live Performance Demos

See it to believe it. CEO Moshe Tanach walks you through each of our groundbreaking demonstrations: a look inside the ready-to-go NR1-S AI Inference Appliance, enterprise-ready LLM solutions, and competitive performance advantages over CPU-reliant AI Accelerator systems.

- Inside the NR1-S AI Inference Appliance

- Maximizing Your AI Accelerator Investment with NR1 (100% Utilization)

- Transforming Data Centers with NeuReality

- NR1 Competitive Advantage Over CPU-Centric Architecture

Want a deeper dive with detailed technical and business performance metrics and comparisons to CPU-centric architectures? Book a meeting below! Or skip the line: ask for a personal demo, or sign up for a Proof of Concept on-site or through a cloud service provider.

Value and Benefits to You

- Ready-Made, Plug-In AI Inference Solution: A unique, simple experience with the one-of-a-kind, super boosted NR1-S AI Inference Appliance, combining ready-made software and APIs in one out-of-the-box solution. You’ll be up and running in less than a day. Go to market 3x faster with your LLM solutions (such as Llama and Mistral), while cutting the cost and time of porting from Python to NR1 with far fewer steps.

- Unmatched Performance with Any AI Workload: With NR1-S, you can super boost any AI model or workload to near 100% utilization with linear scalability, compared with conventional AI-host CPU architectures that limit GPUs to under 30-50% utilization today. NeuReality demonstrated Llama 3, Mistral, Computer Vision, Natural Language Processing, and Automatic Speech Recognition with 50-90% performance gains and 13-15x greater energy efficiency at the lowest cost per AI query.

- Your Ticket to Acquiring New Customers: Deliver superior performance per dollar and per watt, and your ability to win new markets and customers is unlimited. The NR1-S improves your time-to-market for great customer experiences with our easy-to-use and affordable-to-run AI Appliance. With NR1, you will avoid the *surprise* high operational costs of AI Inference built on costlier, less efficient CPU architecture. And that means best-in-class Total Cost of Ownership.

Test, Try and Where to Buy! Experience Revolutionary AI

- Talk to Our Experts

Schedule a meeting with one of our experts to explore how NeuReality can accelerate your AI initiatives.

- Get in Touch

Contact us here to discuss your AI serving needs and tailored solutions for SDKs and ready-to-go LLM applications.

- Test and Try Our NR1-S Appliance

Experience it to believe it. We will coordinate a test or a visit with one of our customers. Contact Jim Wilson, VP Sales, to set it up.

Stay Connected

Stay informed about NeuReality’s cutting-edge innovations and updates:

Follow us on LinkedIn, Twitter, and YouTube for the latest news.

Bookmark our blog for ongoing insights into AI inference and data center transformation.

Thank you for joining us at SC24. Let’s continue driving the future of AI together!