NeuReality Redefines the AI Head Node with Arm Neoverse V3

AI has the potential to transform nearly every sector – from enhanced medical diagnostics, industrial automation and workplace safety to increased innovation and greater productivity. However, 88% of AI proofs of concept don’t make it to production deployment. While optimism is high, the skyrocketing cost and complexity of supporting and scaling AI continue to be a challenge. But it doesn’t have to be this way.

At NeuReality, we saw early on that even though GPUs are evolving at breakneck speed, those gains are lost if the head node can’t keep up. The head node must be re-imagined from the ground up to deliver orchestration and resource management for distributed GPU setups in data center environments. AI systems need to be purpose-built, not just repurposed. This was our goal with the NR1 AI-CPU: to create a purpose-built heterogeneous AI head node that can host any GPU and deliver a scalable, energy-efficient inference system with great performance at a lower cost.

Demand continues to grow for AI-ready data center infrastructure to power inference at scale, from industries including financial services and health and life sciences. Our work with Arm has played a huge role in our success with NR1 – success that we look forward to building on as we now begin to introduce our next generation NR2 chip.

Building the Ultimate AI Head Node with Arm Neoverse

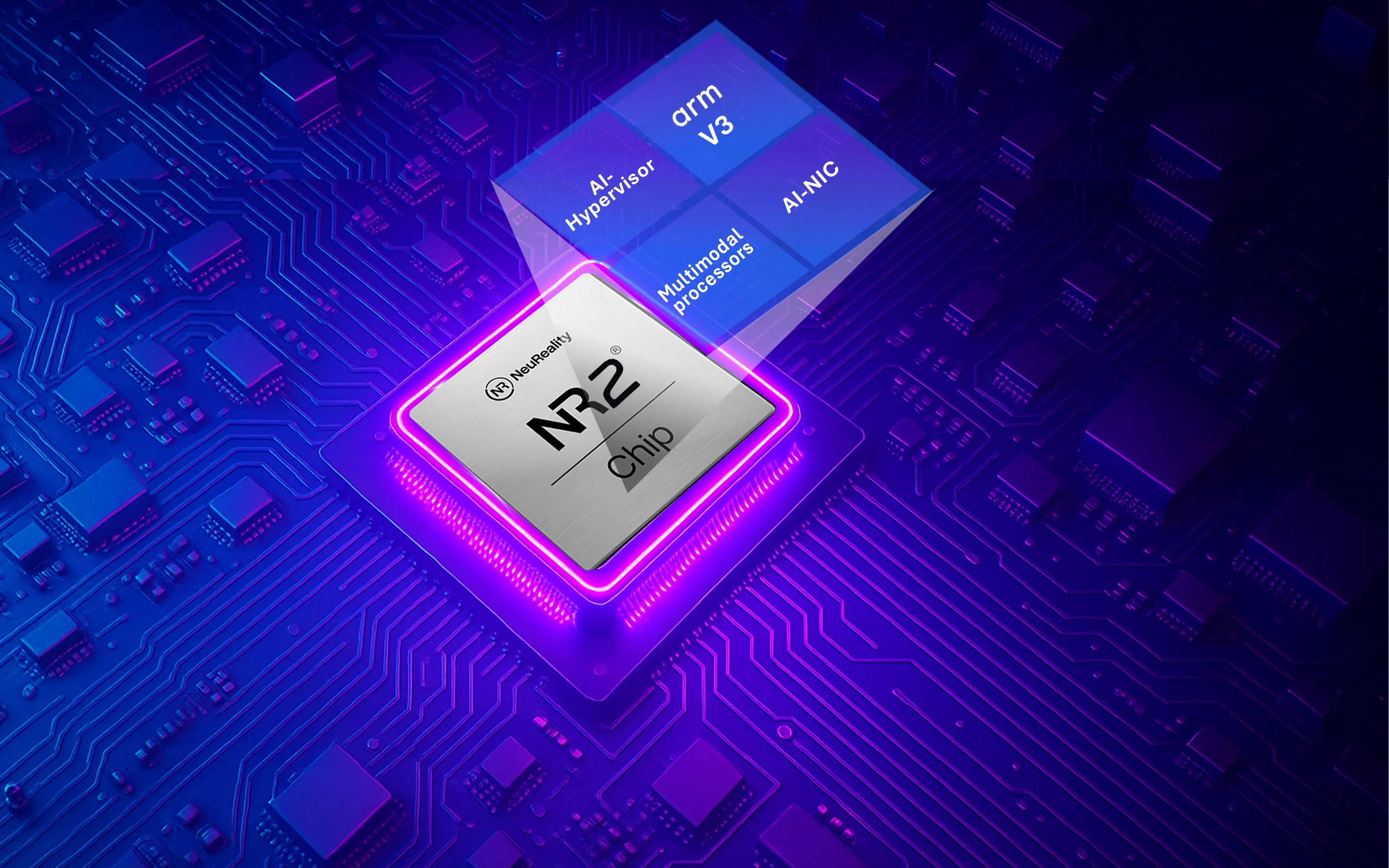

AI’s potential and ROI are being bottlenecked by one-size-fits-all legacy architectures that try to serve as head node CPUs, causing performance, throughput and TCO to suffer. With the NR1 AI-CPU, we took a different, purpose-built approach to compute based on Arm Neoverse cores. We reshaped the legacy CPU head node into a heterogeneous compute device, embedded with DSP and custom Audio and Computer Vision Codec processors wrapped around a novel AI-Hypervisor that offloads data movement and processing. An embedded AI-NIC manages both incoming/outgoing traffic (north-south) and internal data transfers (east-west). It enables communication between clients and servers, between server nodes, and between GPUs across different servers or racks. Our AI-Hypervisor, together with Arm Neoverse cores, provides the orchestration layers needed to drive maximum utilization and efficiency.

Building on the work we did with NR1, we’ve deepened our collaboration with Arm to deliver our next-generation NR2 chip powered by the Arm Neoverse Compute Subsystems (CSS) V3. Together, we’re redefining what’s possible at the silicon and system level for AI inference and training.

The Neoverse V3 cores deliver higher single-threaded performance, which is crucial for many AI tasks. With an enhanced memory subsystem, built-in support for advanced interconnect technologies, and optimized software frameworks and libraries, the Neoverse CSS V3 was designed to excel at modern AI workloads. It is the best candidate to be the foundation of NeuReality’s next-generation AI-CPU. Additionally, Arm’s mature software ecosystem meets the demand for software readiness and interoperability.

NR2 will take everything we’ve learned with NR1 and amplify it. We will have more to share on NR2 soon, but today we are excited to preview some of the new capabilities, including:

- Up to 128 cores per package, optimized for inferencing and training workloads at scale.

- Deeper integration between AI-CPU and AI-NIC for real-time model coordination, micro-service-based disaggregation, token streaming, KV-cache optimizations, and inline orchestration.

- A built-in AI-Hypervisor and an embedded AI-over-Fabric network engine that streamline data flow between AI clients and servers, and within large AI pipelines, with high efficiency and low latency.

- Native compilation and runtime flow integration for seamless deployment.

The result won’t just be a more powerful version of what came before. It will be a new class of AI head node, capable of managing inference and training workloads with unmatched efficiency.

Together, we are driving the architectural shift the industry needs: a pathway to open, efficient, AI-optimized infrastructure. We are building the AI head node of the future, designed for performance, openness, and scale from silicon to system to software.

Look for more details soon on the NR2.
